Robust Underwater State Estimation and Mapping


DISSERTATION DEFENSE

Author: Bharat Joshi

Advisor: Dr. Ioannis Rekleitis

Date: October 11, 2023

Time: 3 pm - 5 pm

Place: Innovation Center, Room 2277 & Virtual

Abstract:

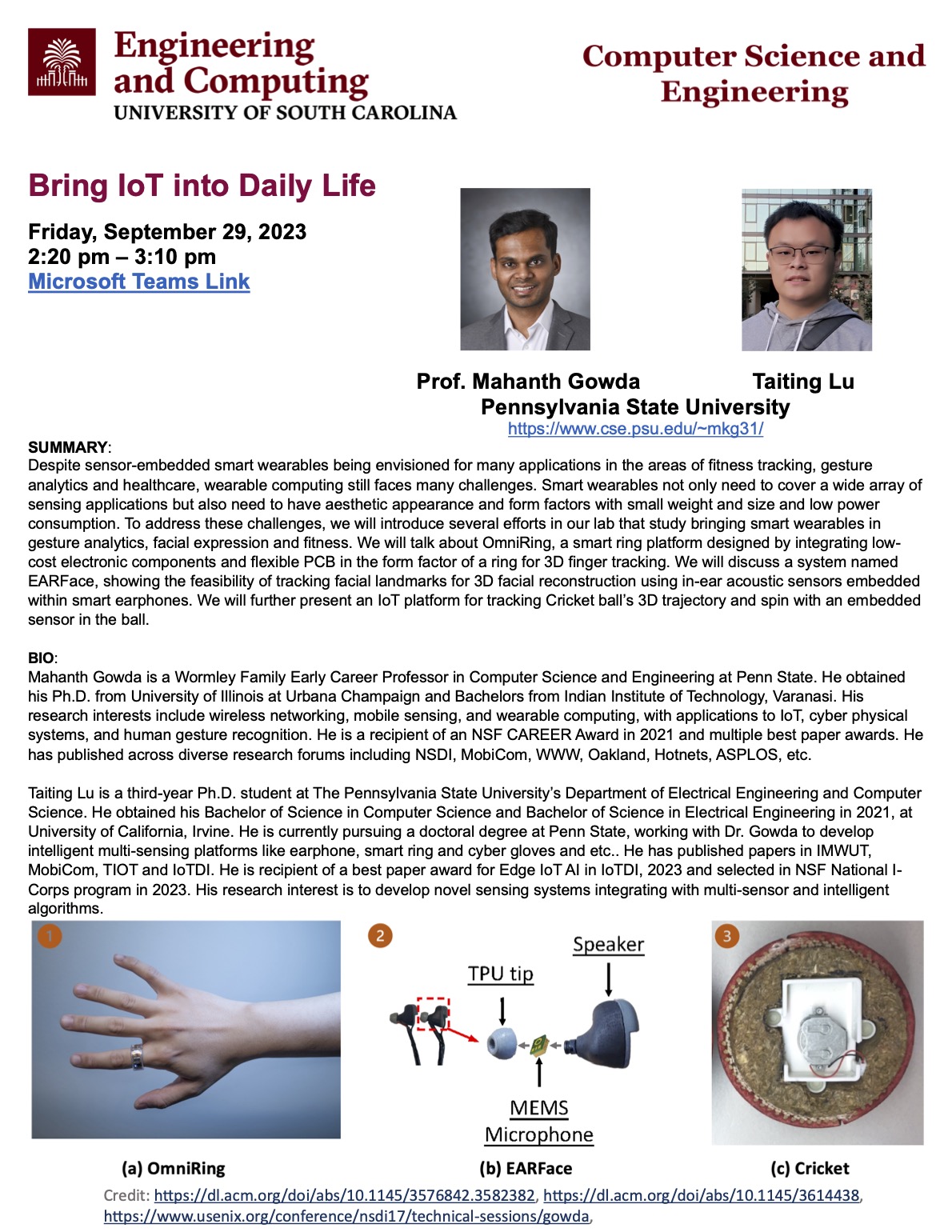

The ocean covers more than two-thirds of Earth's surface, yet it remains relatively unexplored compared to land. Mapping underwater structures is essential for both archaeological and conservation purposes. This dissertation focuses on employing a team of robots to map underwater structures using vision-based simultaneous localization and mapping (SLAM). The overarching goal of this research is to create a team of autonomous robots that map large underwater structures in a coordinated fashion. This requires each robot to maintain an accurate and robust estimate of its own pose and to know the relative poses of the other robots in the team. However, the underwater environment is GPS-denied, communication-constrained, and often has low visibility, all of which pose challenges for state estimation. This dissertation aims to diagnose the challenges faced by underwater vision-based state estimation algorithms and to provide solutions that improve their robustness and accuracy. Moreover, robust state estimation combined with deep learning-based relative localization forms the backbone of cooperative mapping by a team of robots.

To understand the challenges of underwater state estimation, the performance of open-source, state-of-the-art visual-inertial SLAM algorithms is compared across multiple underwater environments. Extensive evaluation showed that consumer-level imaging sensors are ill-equipped to handle challenging underwater image formation, low light intensity, and fluctuations in artificial lighting. Thus, the GoPro action camera, which captures high-definition video along with synchronized IMU measurements embedded in a single mp4 file, is presented as a substitute. In addition to using enhanced images, fast sparse map deformation is performed after loop closure to obtain a globally consistent map. However, in some environments, such as underwater caves, loop closure is difficult because of narrow passages and turbulent flows, resulting in yaw drift over long trajectories. To address this, high-frequency magnetometer measurements are fused in a tightly coupled manner into optimization-based visual-inertial odometry using IMU preintegration, significantly reducing yaw drift. Even with good-quality cameras, there are scenarios during underwater deployments where visual SLAM fails. Robust state estimation is therefore achieved by switching between visual-inertial odometry and a model-based estimator to keep track of the Aqua2 Autonomous Underwater Vehicle (AUV) during underwater operations.

Mapping large underwater structures requires cooperative mapping by a team of robots that are equipped with robust state estimation and can localize relative to each other. A deep learning framework, trained only on synthetic images, is designed for real-time 6D pose estimation of an Aqua2 AUV with respect to an observing camera. This dissertation combines robust state estimation and accurate relative localization, contributing to the mapping of underwater structures using multiple AUVs.
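The abstract states that fusing magnetometer measurements reduces yaw drift but does not give the measurement model. The sketch below is an illustration under stated assumptions, not the dissertation's formulation: it assumes a known, constant local magnetic field in a z-up world frame, noise-free measurements, and a yaw-only orientation error, and every function name and field value in it is hypothetical. It shows why the magnetometer helps: a residual built from gravity alone (the reference the accelerometer provides) is blind to yaw, which is why yaw is unobservable in visual-inertial odometry and can drift, while a magnetometer residual changes with yaw.

```python
import math


def rot_z(yaw):
    """World-from-body rotation matrix for a pure yaw angle (radians), z axis up."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def world_to_body(R_wb, v_world):
    """Express a world-frame vector in the body frame: R_wb^T @ v_world."""
    return [sum(R_wb[r][i] * v_world[r] for r in range(3)) for i in range(3)]


def residual_norm(R_wb, v_world, v_body_measured):
    """Norm of (measured - predicted) for a known world-frame reference vector."""
    predicted = world_to_body(R_wb, v_world)
    return math.sqrt(sum((m - p) ** 2 for m, p in zip(v_body_measured, predicted)))


# Hypothetical reference vectors in a z-up world frame (illustrative values only).
GRAVITY_WORLD = [0.0, 0.0, -9.81]  # m/s^2
MAG_WORLD = [0.2, 0.0, -0.4]       # local field with a horizontal component, arbitrary units


def yaw_residuals(true_yaw, estimated_yaw):
    """Return (gravity residual, magnetometer residual) for a yaw-only pose error."""
    R_true = rot_z(true_yaw)
    R_est = rot_z(estimated_yaw)
    # Noise-free simulated body-frame measurements taken at the true orientation.
    g_meas = world_to_body(R_true, GRAVITY_WORLD)
    m_meas = world_to_body(R_true, MAG_WORLD)
    return (residual_norm(R_est, GRAVITY_WORLD, g_meas),
            residual_norm(R_est, MAG_WORLD, m_meas))


if __name__ == "__main__":
    for est in (0.0, 0.3):
        g_res, m_res = yaw_residuals(true_yaw=0.3, estimated_yaw=est)
        print(f"estimated yaw {est:.1f} rad: gravity residual {g_res:.4f}, "
              f"magnetometer residual {m_res:.4f}")
```

With a true yaw of 0.3 rad, the gravity residual is zero for both estimates, while the magnetometer residual is about 0.06 for the wrong estimate and zero for the correct one. In a tightly coupled optimizer, a residual of this general form would be added as an extra factor alongside the preintegrated IMU and visual terms; sensor biases, calibration, and local magnetic disturbances are ignored in this sketch.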