Physical Layer Reliability for Air-Ground and Air-Air Networking

In-Person Meeting Location:

Storey Innovation Center 1400

Abstract:

Aviation is growing rapidly, and with it the need for reliable, robust wireless signaling for communications, navigation, and surveillance. As in any communication system, the physical layer (PHY) forms the foundation: if the PHY fails, higher-layer operation is irrelevant. In this talk, we briefly describe the growth in aviation and in related areas of wireless communications, along with some nascent applications and the national and international programs aimed at supporting them. We then turn our attention to the PHY and describe some of the unique challenges of aviation networking, focusing on the air-ground and air-air channels themselves. These channels can be rapidly time-varying, distorting, and lossy, so quantifying their effects is critical to designing PHY techniques that effectively mitigate them. We show example results from prior and recently completed NASA projects that illustrate some of these challenges. The talk concludes with a summary and an identification of key areas for future work.

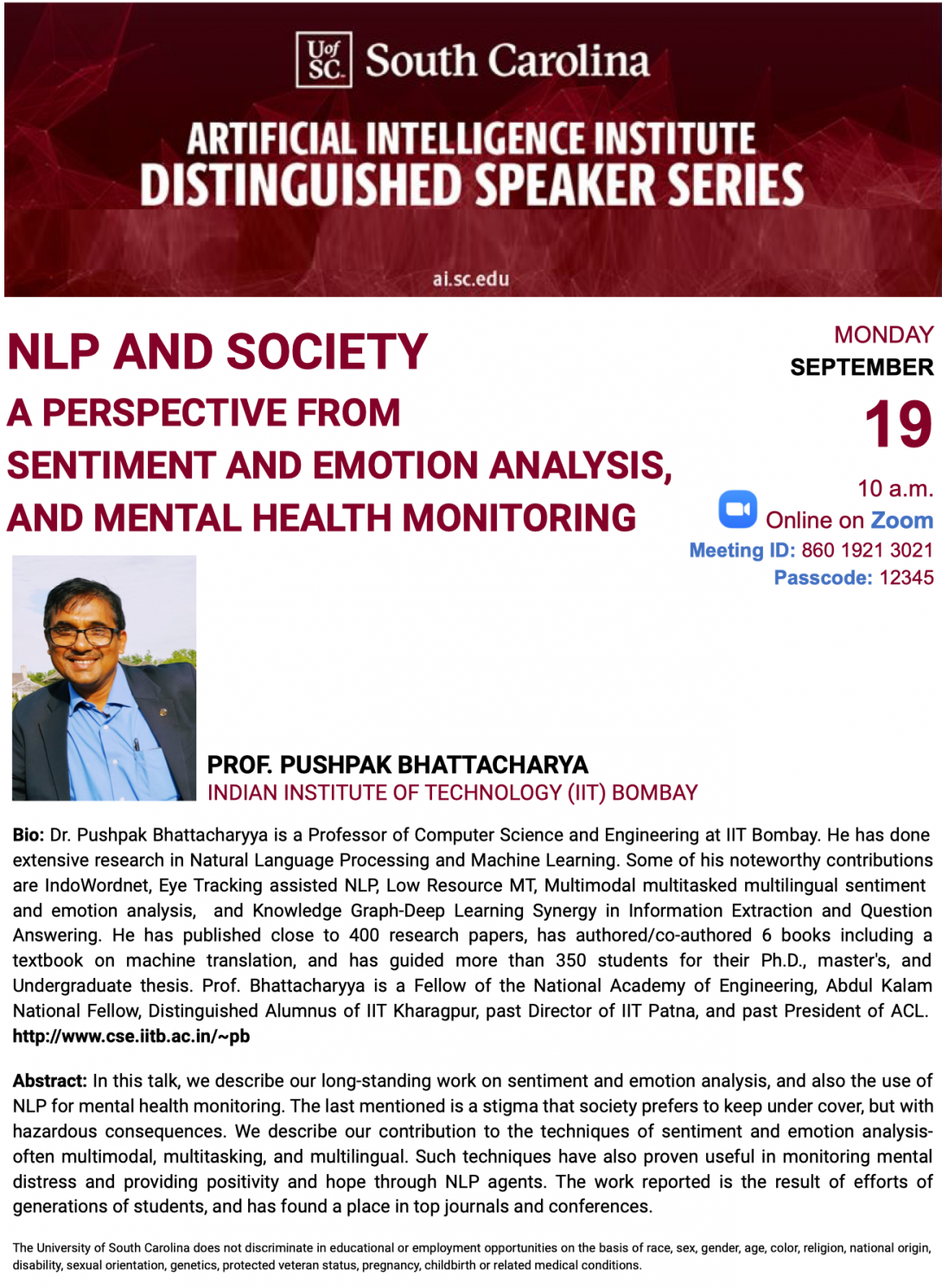

Speaker's Bio:

David W. Matolak received the B.S. degree from The Pennsylvania State University, the M.S. degree from The University of Massachusetts, and the Ph.D. degree from The University of Virginia, all in electrical engineering. He has over 25 years' experience in communication system research, development, and deployment with industry, government institutions, and academia, including AT&T Bell Labs, L3 Communication Systems, MITRE, and Lockheed Martin. He has over 250 publications and nine patents. He was a professor at Ohio University from 1999 to 2012, and since 2012 has been a professor at the University of South Carolina. He has served as an Associate Editor for several IEEE journals and has delivered several dozen invited presentations at international venues. His research interests are radio channel modeling, communication techniques for non-stationary fading channels, and secure and covert communications. Prof. Matolak is a Fellow of the IEEE, a member of standards groups in RTCA and ITU, and a member of Eta Kappa Nu, Sigma Xi, Tau Beta Pi, URSI, ASEE, and AIAA.