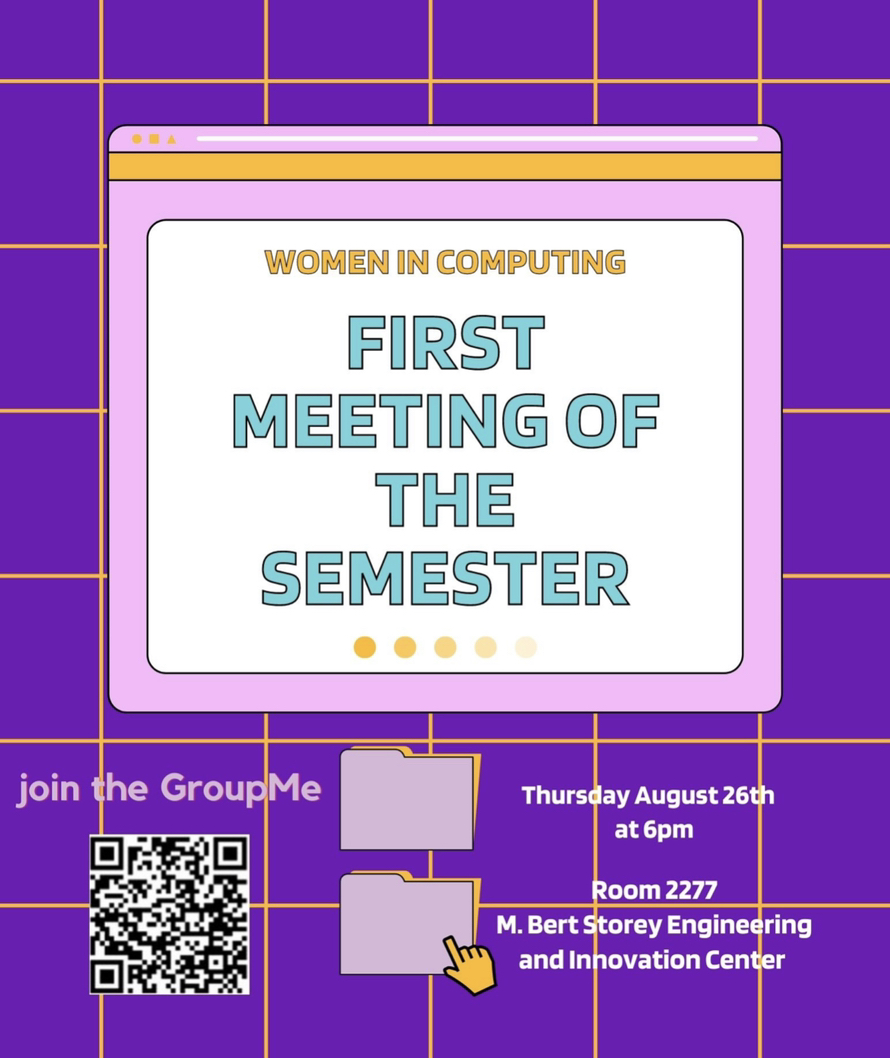


DISSERTATION DEFENSE

Department of Computer Science and Engineering

University of South Carolina

Towards More Trustworthy Deep Learning: Accurate, Resilient, and Explainable Countermeasures Against Adversarial Examples

Author: Fei Zuo

Advisor: Dr. Qiang Zeng

Date: Aug 12, 2021

Time: 11:00am

Place: Virtual Defense

Abstract

Despite the great achievements of neural networks on tasks such as image classification, they are brittle and vulnerable to adversarial example (AE) attacks. As deep learning techniques become prevalent, the threat of AEs attracts increasing attention, since AEs may lead to serious consequences in critical applications such as disease diagnosis.

To defeat attacks based on AEs, the research community has pursued both detection and defense techniques. While many countermeasures against AEs have been proposed, recent studies show that existing detection methods often become ineffective against adaptive AEs. In this work, we counter AEs by identifying and exploiting their noticeable characteristics.

First, we note that L2 adversarial perturbations are among the most effective and hardest-to-detect attacks, and how to detect adaptive L2 AEs remains an open question. We find that if some pixels of an L2 AE are randomly erased and then restored with an inpainting technique, the AE tends to be classified differently before and after these steps, whereas a benign sample does not show this symptom. We thus propose a novel AE detection technique, Erase-and-Restore (E&R), that exploits this intriguing sensitivity of L2 attacks. Comprehensive experiments on standard image datasets show that the proposed detector is effective and accurate. More importantly, our approach demonstrates strong resilience to adaptive attacks. We also interpret the detection technique through both visualization and quantification.
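The erase-then-restore check described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the dissertation's implementation: images are plain nested lists of grayscale values, `classify` is any callable returning a label, the naive diffusion fill stands in for the real inpainting technique (which this abstract does not name), and the erase rate, number of trials, and vote threshold are hypothetical parameters.

```python
import random
from typing import Callable, List

Image = List[List[float]]  # grayscale image, pixel values in [0, 1]
Mask = List[List[bool]]    # True marks an erased pixel


def random_erase_mask(img: Image, rate: float, rng: random.Random) -> Mask:
    """Mark each pixel as erased independently with probability `rate`."""
    return [[rng.random() < rate for _ in row] for row in img]


def diffusion_inpaint(img: Image, mask: Mask, iters: int = 10) -> Image:
    """Naive stand-in (assumption) for a real inpainting model: erased pixels
    start at 0 and are repeatedly replaced by the mean of their 4-neighbours."""
    h, w = len(img), len(img[0])
    out = [[0.0 if mask[y][x] else img[y][x] for x in range(w)] for y in range(h)]
    for _ in range(iters):
        nxt = [row[:] for row in out]
        for y in range(h):
            for x in range(w):
                if not mask[y][x]:
                    continue  # known pixels are never changed
                nbrs = [out[j][i]
                        for j, i in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                        if 0 <= j < h and 0 <= i < w]
                if nbrs:
                    nxt[y][x] = sum(nbrs) / len(nbrs)
        out = nxt
    return out


def erase_and_restore_flag(img: Image, classify: Callable[[Image], int],
                           rate: float = 0.2, trials: int = 5,
                           threshold: float = 0.5, seed: int = 0) -> bool:
    """Flag `img` as a suspected AE if erase-then-inpaint changes its predicted
    label in more than `threshold` of the `trials` random erasures.
    rate/trials/threshold are hypothetical defaults, not tuned values."""
    rng = random.Random(seed)
    original = classify(img)
    changed = 0
    for _ in range(trials):
        restored = diffusion_inpaint(img, random_erase_mask(img, rate, rng))
        if classify(restored) != original:
            changed += 1
    return changed / trials > threshold
```

In a real setting `classify` would wrap the trained network, and the stand-in fill would be replaced by a proper inpainting model; repeating the erasure over several random masks makes the decision less dependent on any single mask.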

Second, previous work holds that it is challenging to properly alleviate the effect of the heavy corruptions caused by L0 attacks. However, we argue that this uncontrollable heavy perturbation is an inherent limitation of L0 AEs, and we exploit it to thwart such attacks. We thus propose a novel AE detector that converts the detection problem into a comparison problem. In addition, we show that the pre-processing technique used for detection can also work as an effective defense, with a high probability of removing the adversarial influence of L0 perturbations. Thus, our system demonstrates not only high AE detection accuracy but also a notable capability to correct classification results.
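One way to picture "detection as comparison" is to classify an input both as-is and after a pre-processing step, and to treat a label mismatch as evidence of an AE. In the sketch below the pre-processing step is a 3x3 median filter, chosen only as a familiar stand-in for suppressing sparse, heavy perturbations; the abstract does not say which technique the dissertation uses, and `median_filter` and `compare_and_correct` are hypothetical names.

```python
from statistics import median
from typing import Callable, List, Tuple

Image = List[List[float]]  # grayscale image, pixel values in [0, 1]


def median_filter(img: Image, k: int = 3) -> Image:
    """k x k median filter (stand-in pre-processing, an assumption);
    out-of-range coordinates are clamped to the image border."""
    h, w = len(img), len(img[0])
    r = k // 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[min(max(j, 0), h - 1)][min(max(i, 0), w - 1)]
                      for j in range(y - r, y + r + 1)
                      for i in range(x - r, x + r + 1)]
            row.append(median(window))
        out.append(row)
    return out


def compare_and_correct(img: Image,
                        classify: Callable[[Image], int]) -> Tuple[bool, int]:
    """Classify the raw and the pre-processed image. A label mismatch flags a
    suspected L0 AE; the pre-processed label doubles as the corrected prediction."""
    raw_label = classify(img)
    cleaned_label = classify(median_filter(img))
    return raw_label != cleaned_label, cleaned_label
```

Returning the pre-processed label alongside the flag mirrors the idea in the paragraph above that the same pre-processing can serve both as a detector and as a defense that corrects the classification.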

Finally, we propose a comprehensive AE detector that systematically combines the two detection methods to thwart all categories of widely discussed AEs, i.e., L0, L2, and L∞ attacks. By inheriting the strengths of both component detectors, the new hybrid AE detector not only distinguishes various kinds of AEs but also has a very low false positive rate on benign images. More significantly, by exploiting the noticeable characteristics of AEs, the proposed detector is highly resilient to adaptive attacks, filling a critical gap in AE detection.
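A simple way to combine detectors is a logical OR, sketched below under the assumption that each component is a callable returning `True` for a suspected AE. The abstract does not specify how the two methods are actually combined, so `make_hybrid` and `false_positive_rate` are hypothetical illustrations rather than the proposed system.

```python
from typing import Callable, Iterable, Sequence, TypeVar

T = TypeVar("T")


def make_hybrid(detectors: Sequence[Callable[[T], bool]]) -> Callable[[T], bool]:
    """Combine component detectors with a logical OR (an assumed combination
    rule): an input is flagged as adversarial if any component flags it."""
    def detect(x: T) -> bool:
        return any(d(x) for d in detectors)
    return detect


def false_positive_rate(detect: Callable[[T], bool], benign: Iterable[T]) -> float:
    """Fraction of benign inputs that `detect` wrongly flags as adversarial."""
    samples = list(benign)
    return sum(detect(x) for x in samples) / len(samples)
```

Because an OR can only add alarms, the hybrid's false positive rate is at most the sum of the components' rates (union bound), which is why each component needs a low false positive rate on its own for the combination to stay low.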

When: Tuesday, April 27, 11:00-12:00

https://zoom.us/j/98717098004?pwd=T3lPSGZ2K1pKTllQaWhRMDBtNVcrUT09

Speaker: Dr. Christian O’Reilly, McGill University, Canada

Talk abstract: Modeling is the bedrock on which science and technology have been built. Nowadays, almost every part of manufactured objects, be it a supercomputer or a simple light bulb, is modeled and simulated so that we can gain a comprehensive understanding of how it works and how it will react under different conditions. Compared to human-made objects, our ability to get a grip on complex biological systems such as the brain has been hindered by these systems being black boxes whose inner workings were mostly unknown. As we gain more insight into the mechanisms at play, our capacity to model and simulate these systems increases and further sheds light on their remaining mysteries. In parallel, as advances in medicine and science give us a finer appreciation of these biological systems, they also generate more intricate challenges. Tackling these new problems often requires integrating many sources of knowledge across fields and scales, from slow-evolving social factors to millisecond molecular interactions. Understanding complex, multifactorial, and multidimensional neurodevelopmental issues like those present in autism spectrum disorder is such a problem. In this context, setting up a solid analytical framework empowered by modeling and simulation is even more important. In the first half of this talk, I will go over some of my experiences in analyzing and modeling neuronal systems at different scales, from the macroscopic whole-brain scale to the microscopic cellular scale. Then, in the second half, building on these experiences, I will make a case for the importance of systematically benchmarking the different aspects of the brain across scales and integrating such knowledge into analytical tools that we can use for scientific discoveries and clinical decisions.

Speaker bio (short): Christian O’Reilly received his B.Ing. (electrical eng.; 2007), his M.Sc.A. (biomedical eng.; 2011), and his Ph.D. (biomedical eng.; 2012) from the École Polytechnique de Montréal, where he worked under the mentorship of Prof. R. Plamondon to apply pattern recognition and machine learning to predicting brain stroke risk. He was later a postdoctoral fellow in Prof. T. Nielsen’s laboratory at the Center for Advanced Research in Sleep Medicine of the Hôpital du Sacré-Coeur/Université de Montréal (2012-2014) and then an NSERC postdoctoral fellow at McGill's Brain Imaging Center (2014-2015), where he worked in Prof. Baillet’s laboratory on characterizing EEG sleep transients, their sources, and their functional connectivity. During this period, he was also a visiting scholar in Prof. K. Friston's laboratory at University College London, studying effective connectivity during sleep transients using dynamic causal modeling, an approach based on the Bayesian inversion of neural mass models. He later took on a 6-month fellowship with Prof. M. Elsabbagh on functional connectivity in autism, after which he moved to Switzerland to work for the Blue Brain Project (Prof. S. Hill; EPFL; 2015-2018), where he led efforts on large-scale biophysically detailed modeling of the thalamocortical loop. Since 2020, he has resumed his collaboration with Dr. Elsabbagh as a research associate at the Azrieli Centre for Autism Research (McGill), where he studies brain connectivity in autism and related neurodevelopmental disorders.